What are we learning about how consultancies adopt AI?

Explain, Show, Play, Review, Unwrap, Repack, Repeat

11 months of perpetual learning

We released our product (Discy AI) into (perpetual) beta last summer.

Since then, onboarding new firms, teams, and users has been a rollercoaster, as we adapt to their different styles and assumptions about bringing an AI tool into their workflow.

This isn't surprising.

In theory, onboarding people onto a new tool sounds straightforward:

1) Explain the premise and walk them through.
2) Ensure power user(s) have access to, and clarity on, key features and settings.
3) Unpack their first use case and how they'll use the platform to support it.
4) Establish open comms and frequent check-ins while they gain familiarity.
5) Provide a comprehensive user guide with text, images, and videos.

The reality diverges somewhat.

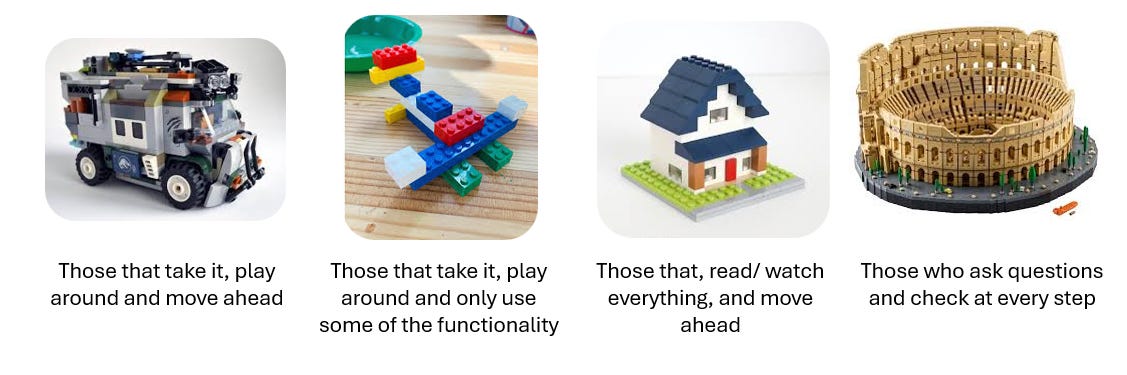

Across individuals within teams (within and across firms), we see a mix of...

And we get it.

Adopting a new tool means rewiring (potentially) years of habit, especially if we accept that humans extend their minds into their environment. Even writing a thought on a Post-it, a notepad, or a whiteboard is outsourcing some cognition.

This might be why some of us do everything in a spreadsheet, or a slide deck.

Not because it is the 'best tool for the job', but because we have an established system for using that tool to perform a cognitive act.

This is especially true given the variable nature of Gen AI.

The big firm experience

Of course, our experience is with boutiques. Discy helps them catch and use new knowledge gained in discovery (a specific workflow).

In large firms, AI adoption efforts revolve around existing knowledge management: giving people an easier (chatbot) way to extract well-structured, synthesised answers from their knowledge stores, as well as to find domain experts and relevant files, and to search policies.

Similar themes arise in what they've shared about their AI adoption:

McKinsey's update on its Lilli rollout highlighted education and iteration: educating staff on what problems it could solve and how to use it in their work, and iterating continuously to make it less confusing, more accurate, and more useful to users.

Oliver Wyman emphasised communication and experimentation: highlighting the risks and the core guardrails for use, encouraging staff to experiment and to think about the impact on their roles, then building out features to support the use cases that emerged.

BCG's well-publicised study suggested adoption relied on education and rethinking workflows: educating staff on which tasks to use the tool for, when not to rely on it, and what to review its output for. That education needs constant refreshing as AI evolves and use cases grow.

Takeaway thoughts

For consulting:

AI needs to reinvent consulting workflows. While tools like Canva, Excalidraw, ARIS, Trello, and others are useful, they have offered incremental uplifts to how consulting work is done, not much beyond the cognitive processes that slides, spreadsheets, and docs already support. AI requires a deeper unpacking and repacking of what the work is.

Because of this, adopting AI is a more comprehensive transformation. It invites you to reflect on what you'd change to better support clients on their journey: the inputs you need, the outputs you provide, how you provide them, and the business model around all of this.

Most change disappoints. The Sunstone Substack shares several helpful principles for what successful change needs. Part of this is being clear enough on the aspiration, then feeding it through the management systems and decision-making. For example, if you aspire to forge high-value knowledge and the ability to do much more complex analysis, which tasks and workflows do you simply stop? Which challenges should you invest time and funding to solve?

You can't force change; at most, you can influence the environment. Accept change as ongoing and part of the firm's rhythm. Manage people's energy through it. Make it fun and easy: a playground with clear boundaries. Tim has posted about this before, and Oliver Wyman adopted the same stance.

Everyone is still learning. We don't know where we are on the AI journey. 'Good practice' for improving recall accuracy and precision from large language models changes, and often. It is a constant experiment with people and processes (not just the tech). Empower people and let them innovate the way forward.

“Now it is Chat GPT. (But), there will always be a set of emerging technologies that really make us question how we work, how we use these things, how we deploy them quickly.”

- Paul Beswick, CIO, Marsh McLennan

A lot to cover in ~800 words!

No doubt there are some gaping gaps, but I hope it is a helpful thought starter.
